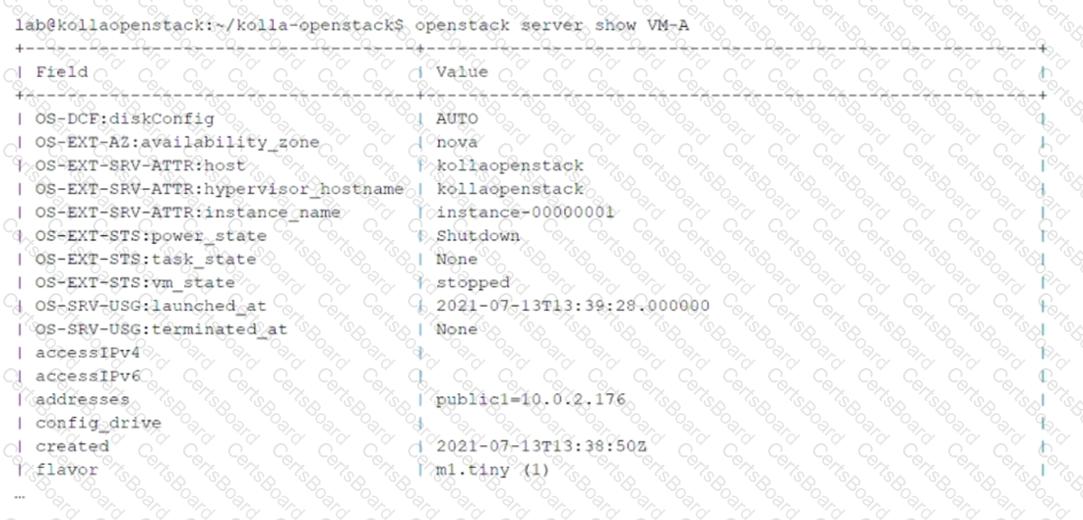

Refer to the exhibit.

You have issued the openstack server show VM-A command and received the output shown in the exhibit.

To which virtual network is the VM-A instance attached?

A. m1.tiny

B. public1

C. Nova

D. kollaopenstack

The openstack server show command provides detailed information about a specific virtual machine (VM) instance in OpenStack. The output includes details such as the instance name, network attachments, power state, and more. Let’s analyze the question and options:

Key Information from the Exhibit:

The addresses field in the output shows:

public1=10.0.2.176

This indicates that the VM-A instance is attached to the virtual network named public1, with an assigned IP address of 10.0.2.176.
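The addresses value can also be read mechanically. The following is a minimal Python sketch, not part of the exhibit: the helper name parse_addresses is hypothetical, and it assumes the client prints several attachments in the layout "net1=ip1, ip2; net2=ip3". The network name is always the text to the left of the equals sign.

```python
def parse_addresses(field: str) -> dict[str, list[str]]:
    """Split an 'addresses' value into {network_name: [ip, ...]}.

    Hypothetical helper; assumes the layout 'net1=ip1, ip2; net2=ip3'.
    """
    networks: dict[str, list[str]] = {}
    for entry in field.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate a trailing separator
        name, _, ips = entry.partition("=")  # network name is left of '='
        networks[name.strip()] = [ip.strip() for ip in ips.split(",") if ip.strip()]
    return networks


# Value taken from the exhibit's addresses field
print(parse_addresses("public1=10.0.2.176"))  # {'public1': ['10.0.2.176']}
```

The single key in the result, public1, is the virtual network; the flavor (m1.tiny) and host name never appear in this field.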

Option Analysis:

A. m1.tiny

Incorrect: m1.tiny refers to the flavor of the VM, which specifies the resource allocation (e.g., CPU, memory, disk). It is unrelated to the virtual network.

B. public1

Correct: The addresses field explicitly states that the VM-A instance is attached to the public1 virtual network.

C. Nova

Incorrect: Nova is the OpenStack compute service that manages VM instances. It is not a virtual network.

D. kollaopenstack

Incorrect: kollaopenstack appears in the output as the hostname or project name but does not represent a virtual network.

Why public1?

Network Attachment: The addresses field in the output directly identifies the virtual network (public1) to which the VM-A instance is attached.

IP Address Assignment: The IP address (10.0.2.176) confirms that the VM is connected to the public1 network.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server show command. Recognizing how virtual networks are represented in OpenStack is essential for managing VM connectivity.

For example, Juniper Contrail integrates with OpenStack Neutron to provide advanced networking features for virtual networks like public1.

Which two consoles are provided by the OpenShift Web UI? (Choose two.)

A. administrator console

B. developer console

C. operational console

D. management console

OpenShift provides a web-based user interface (Web UI) that offers two distinct consoles tailored to different user roles. Let’s analyze each option:

A. administrator console

Correct:

The administrator console is designed for cluster administrators. It provides tools for managing cluster resources, configuring infrastructure, monitoring performance, and enforcing security policies.

B. developer console

Correct:

The developer console is designed for application developers. It focuses on building, deploying, and managing applications, including creating projects, defining pipelines, and monitoring application health.

C. operational console

Incorrect:

There is no "operational console" in OpenShift. This term does not correspond to any official OpenShift Web UI component.

D. management console

Incorrect:

While "management console" might sound generic, OpenShift specifically refers to the administrator console for management tasks. This term is not officially used in the OpenShift Web UI.

Why These Consoles?

Administrator Console: Provides a centralized interface for managing the cluster's infrastructure and ensuring smooth operation.

Developer Console: Empowers developers to focus on application development without needing to interact with low-level infrastructure details.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenShift's Web UI and its role in cluster management and application development. Recognizing the differences between the administrator and developer consoles is essential for effective collaboration in OpenShift environments.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking features, leveraging both consoles for seamless operation.

Regarding the third-party CNI in OpenShift, which statement is correct?

A. In OpenShift, you can remove and install a third-party CNI after the cluster has been deployed.

B. In OpenShift, you must specify the third-party CNI to be installed during the initial cluster deployment.

C. OpenShift does not support third-party CNIs.

D. In OpenShift, you can have multiple third-party CNIs installed simultaneously.

OpenShift supports third-party Container Network Interfaces (CNIs) to provide advanced networking capabilities. However, there are specific requirements and limitations when using third-party CNIs. Let’s analyze each statement:

A. In OpenShift, you can remove and install a third-party CNI after the cluster has been deployed.

Incorrect:

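OpenShift does not support removing the cluster's network plugin and installing a third-party CNI after the cluster has been deployed; the CNI plugin must be specified during the initial deployment. Red Hat does document a migration from OpenShift SDN to OVN-Kubernetes, but that is a move between Red Hat's own plugins, not to a third-party CNI.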

B. In OpenShift, you must specify the third-party CNI to be installed during the initial cluster deployment.

Correct:

OpenShift requires you to select and configure the desired CNI plugin (e.g., Calico, Cilium) during the initial cluster deployment, through the networking.networkType setting in install-config.yaml. Once the cluster is deployed, switching to a different CNI plugin is not supported.

C. OpenShift does not support third-party CNIs.

Incorrect:

OpenShift supports third-party CNIs as alternatives to its default network plugin (OpenShift SDN in older releases, OVN-Kubernetes in current ones). This flexibility allows users to choose the best networking solution for their environment.

D. In OpenShift, you can have multiple third-party CNIs installed simultaneously.

Incorrect:

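OpenShift supports only one CNI plugin as the cluster's default (primary) pod network at a time, whether it is the default plugin or a third-party CNI. The Multus CNI meta-plugin can attach additional secondary networks to pods, but it does not allow a second plugin to act as the primary cluster network.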

Why This Statement?

Initial Configuration Requirement: OpenShift enforces the selection of a CNI plugin during the initial deployment to ensure consistent and stable networking across the cluster.

Stability and Compatibility: Changing the CNI plugin after deployment could lead to network inconsistencies and compatibility issues, which is why it is not supported.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenShift networking, including the use of third-party CNIs. Understanding the limitations and requirements for CNI plugins is essential for deploying and managing OpenShift clusters effectively.

For example, Juniper Contrail can be integrated as a third-party CNI in OpenShift to provide advanced networking and security features, but it must be specified during the initial deployment.

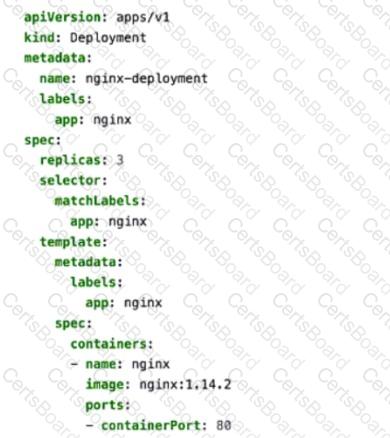

Refer to the exhibit.

You apply the manifest file shown in the exhibit.

Which two statements are correct in this scenario? (Choose two.)

A. The created pods are receiving traffic on port 80.

B. This manifest is used to create a deployment.

C. This manifest is used to create a deploymentConfig.

D. Four pods are created as a result of applying this manifest.

The provided YAML manifest defines a Kubernetes Deployment object that creates and manages a set of pods running the NGINX web server. Let’s analyze each statement in detail:

A. The created pods are receiving traffic on port 80.

Correct:

The containerPort: 80 field in the manifest specifies that the NGINX container listens on port 80 for incoming traffic.

The field is primarily informational: it does not reconfigure NGINX (which listens on port 80 by default) and does not expose the pods outside the cluster, but it documents that the application inside each pod receives traffic on port 80.

B. This manifest is used to create a deployment.

Correct:

The kind: Deployment field explicitly indicates that this manifest is used to create a Kubernetes Deployment.

Deployments are used to manage the desired state of pods, including scaling, rolling updates, and self-healing.

C. This manifest is used to create a deploymentConfig.

Incorrect:

DeploymentConfig is a resource specific to OpenShift, not standard Kubernetes. In OpenShift, a DeploymentConfig provides additional features like triggers and lifecycle hooks, but this manifest uses the standard Kubernetes Deployment object.

D. Four pods are created as a result of applying this manifest.

Incorrect:

The replicas: 3 field in the manifest specifies that the Deployment will create three replicas of the NGINX pod. Therefore, only three pods are created, not four.

Why These Statements?

Traffic on Port 80:

The containerPort: 80 field declares that the NGINX application inside the pod listens on port 80, the default port of the NGINX web server. This is the port the application uses to function as a web server.

Deployment Object:

The kind: Deployment field confirms that this manifest creates a Kubernetes Deployment, which manages the lifecycle of the pods.

Replica Count:

The replicas: 3 field explicitly states that three pods will be created. Any assumption of four pods is incorrect.
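To tie each statement to a manifest field, here is a small Python sketch. The exhibit image is not reproduced here, so the dictionary below is a hypothetical reconstruction built only from the fields the explanation quotes (kind: Deployment, replicas: 3, containerPort: 80); the names nginx-deployment and summarize are illustrative assumptions.

```python
# Hypothetical stand-in for the exhibit's manifest (the original image is not shown).
manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-deployment"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {"name": "nginx", "image": "nginx", "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}


def summarize(m: dict) -> dict:
    """Pull out the fields that decide statements A, B, and D."""
    spec = m["spec"]
    pod_spec = spec["template"]["spec"]
    ports = [p["containerPort"] for c in pod_spec["containers"] for p in c.get("ports", [])]
    return {
        "kind": m["kind"],                # Deployment, not DeploymentConfig
        "pods": spec.get("replicas", 1),  # a Deployment defaults to 1 replica if omitted
        "container_ports": ports,         # declared ports (informational)
    }


print(summarize(manifest))  # {'kind': 'Deployment', 'pods': 3, 'container_ports': [80]}
```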

Additional Context:

Kubernetes Deployments: Deployments are one of the most common Kubernetes objects used to manage stateless applications. They ensure that the desired number of pod replicas is always running and can handle updates or rollbacks seamlessly.

Ports in Kubernetes: The containerPort field in the pod specification declares the port on which the containerized application listens; it is primarily informational. To expose the pods outside the cluster, a Kubernetes Service (e.g., NodePort, LoadBalancer) must be created.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes concepts, including Deployments, Pods, and networking. Understanding how Deployments work and how ports are configured is essential for managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for Deployments like the one described in the exhibit.

You must provide tunneling in the overlay that supports multipath capabilities.

Which two protocols provide this function? (Choose two.)

A. MPLSoGRE

B. VXLAN

C. VPN

D. MPLSoUDP

In cloud networking, overlay networks are used to create virtualized networks that abstract the underlying physical infrastructure. To support multipath capabilities, certain protocols provide efficient tunneling mechanisms. Let’s analyze each option:

A. MPLSoGRE

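Incorrect: MPLS over GRE (MPLSoGRE) is a tunneling protocol that encapsulates MPLS packets within GRE tunnels. Because the outer IP and GRE headers carry no port fields, underlay routers that hash on the outer headers typically see identical values for every flow between the same two tunnel endpoints and send all of that traffic over one path. It therefore does not inherently provide multipath capabilities.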

B. VXLAN

Correct: VXLAN (Virtual Extensible LAN) is an overlay protocol that encapsulates Layer 2 Ethernet frames within UDP packets. The sending tunnel endpoint typically derives the outer UDP source port from a hash of the inner packet's headers, so different inner flows produce different outer headers and the underlay's Equal-Cost Multi-Path (ECMP) routing can spread them across parallel paths. VXLAN is widely used in cloud environments for extending Layer 2 networks across data centers.

C. VPN

Incorrect: Virtual Private Networks (VPNs) are used to securely connect remote networks or users over public networks. They do not inherently provide multipath capabilities or overlay tunneling for virtual networks.

D. MPLSoUDP

Correct: MPLS over UDP (MPLSoUDP) is a tunneling protocol that encapsulates MPLS packets within UDP packets. Like VXLAN, it uses the outer UDP source port as an entropy field, so ECMP in the underlay network can load-balance different flows across multiple paths. MPLSoUDP is often used in service provider environments for scalable and flexible network architectures.

Why These Protocols?

VXLAN: Provides Layer 2 extension and supports multipath forwarding, making it ideal for large-scale cloud deployments.

MPLSoUDP: Combines the benefits of MPLS with UDP encapsulation, enabling efficient multipath routing in overlay networks.
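The difference between UDP-based and GRE-based tunnels can be sketched in a few lines of Python. This is a simplified model under stated assumptions: CRC32 stands in for whatever hash a real router or NIC uses, the tunnel endpoint addresses are hypothetical, and the source-port range 49152-65535 follows the recommendation in RFC 7348 for VXLAN (MPLSoUDP applies the same source-port entropy idea).

```python
import zlib
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def header_hash(*fields) -> int:
    """Deterministic stand-in for a router/NIC hash over header fields (CRC32)."""
    return zlib.crc32("|".join(map(str, fields)).encode())


def udp_entropy_port(inner: Flow) -> int:
    """Outer UDP source port derived from the inner flow, kept in 49152-65535."""
    return 49152 + header_hash(*inner) % (65536 - 49152)


def ecmp_pick(outer_fields: tuple, n_paths: int) -> int:
    """Underlay router chooses one of n equal-cost links from the outer headers."""
    return header_hash(*outer_fields) % n_paths


TEP_A, TEP_B = "192.0.2.1", "192.0.2.2"  # hypothetical tunnel endpoints
UDP, GRE = 17, 47                        # IP protocol numbers
VXLAN_PORT = 4789                        # IANA-assigned VXLAN destination port

flows = [Flow("10.0.0.5", "10.0.1.9", 6, 40000 + i, 443) for i in range(8)]

# UDP encapsulation: the outer 5-tuple changes with the inner flow.
udp_paths = {ecmp_pick((TEP_A, TEP_B, UDP, udp_entropy_port(f), VXLAN_PORT), 4) for f in flows}

# GRE encapsulation: no ports in the outer header, so every flow hashes alike.
gre_paths = {ecmp_pick((TEP_A, TEP_B, GRE), 4) for f in flows}

print("UDP overlay paths used:", sorted(udp_paths))
print("GRE overlay paths used:", sorted(gre_paths))
```

With these eight inner flows the UDP set usually contains several of the four paths, while the GRE set always contains exactly one, because nothing in the GRE outer tuple varies per flow.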

JNCIA Cloud References:

The JNCIA-Cloud certification covers overlay networking protocols like VXLAN and MPLSoUDP as part of its curriculum on cloud architectures. Understanding these protocols is essential for designing scalable and resilient virtual networks.

For example, Juniper Contrail uses VXLAN to extend virtual networks across distributed environments, ensuring seamless communication and high availability.

Which OpenShift resource represents a Kubernetes namespace?

A. Project

B. ResourceQuota

C. Build

D. Operator

OpenShift is a Kubernetes-based container platform that introduces additional abstractions and terminologies. Let’s analyze each option:

A. Project

Correct:

In OpenShift, a Project represents a Kubernetes namespace with additional capabilities. It provides a logical grouping of resources and enables multi-tenancy by isolating resources between projects.

B. ResourceQuota

Incorrect:

A ResourceQuota is a Kubernetes object that limits the amount of resources (e.g., CPU, memory) that can be consumed within a namespace. While it is used within a project, it is not the same as a namespace.

C. Build

Incorrect:

A Build is an OpenShift-specific resource used to transform source code into container images. It runs inside a project but does not represent a namespace.

D. Operator

Incorrect:

An Operator is a Kubernetes extension that automates the management of complex applications. It operates within a namespace but does not represent a namespace itself.

Why Project?

Namespace Abstraction: OpenShift Projects extend Kubernetes namespaces by adding features like user roles, quotas, and lifecycle management.

Multi-Tenancy: Projects enable organizations to isolate workloads and resources for different teams or applications.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenShift and its integration with Kubernetes. Understanding the relationship between Projects and namespaces is essential for managing OpenShift environments.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking and security features for Projects, ensuring secure and efficient resource isolation.

Which OpenStack node runs the network agents?

A. block storage

B. controller

C. object storage

D. compute

In OpenStack, network agents are responsible for managing networking tasks such as DHCP, routing, and firewall rules. These agents run on specific nodes within the OpenStack environment. Let’s analyze each option:

A. block storage

Incorrect: Block storage nodes host the Cinder service, which provides persistent storage volumes for virtual machines. They do not run network agents.

B. controller

Incorrect: Controller nodes host core services like Keystone (identity), Horizon (dashboard), and Glance (image service). While some networking services (e.g., Neutron server) may reside on the controller node, the actual network agents typically do not run here.

C. object storage

Incorrect: Object storage nodes host the Swift service, which provides scalable object storage. They are unrelated to running network agents.

D. compute

Correct: Compute nodes run the Nova compute service, which manages virtual machine instances. Compute nodes also host Neutron agents that handle networking for the VMs on that node, most notably the Layer 2 agent (for example, the Open vSwitch or Linux bridge agent) and, in distributed virtual routing (DVR) deployments, the L3 and metadata agents.

Why Compute Nodes?

Proximity to VMs: Network agents run on compute nodes to ensure efficient communication with VMs hosted on the same node.

Decentralized Architecture: By distributing network agents across compute nodes, OpenStack achieves scalability and fault tolerance.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack architecture, including the roles of compute nodes and network agents. Understanding where network agents run is essential for managing OpenStack networking effectively.

For example, Juniper Contrail integrates with OpenStack Neutron to provide advanced networking features, leveraging network agents on compute nodes for traffic management.

Your organization manages all of its sales through the Salesforce CRM solution.

In this scenario, which cloud service model are they using?

A. Storage as a Service (STaaS)

B. Software as a Service (SaaS)

C. Platform as a Service (PaaS)

D. Infrastructure as a Service (IaaS)

Cloud service models define how services are delivered and managed in a cloud environment. The three primary models are:

Infrastructure as a Service (IaaS): Provides virtualized computing resources such as servers, storage, and networking over the internet. Examples include Amazon EC2 and Microsoft Azure Virtual Machines.

Platform as a Service (PaaS): Provides a platform for developers to build, deploy, and manage applications without worrying about the underlying infrastructure. Examples include Google App Engine and Microsoft Azure App Services.

Software as a Service (SaaS): Delivers fully functional applications over the internet, eliminating the need for users to install or maintain software locally. Examples include Salesforce CRM, Google Workspace, and Microsoft Office 365.
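One way to see how the three models divide responsibility is as a cut point in a layered stack. The Python sketch below assumes the commonly drawn nine-layer textbook stack; the layer names and the cut points are a simplification and an assumption of this example, not JNCIA terminology, and real provider contracts vary.

```python
# Commonly drawn nine-layer stack, from the top (closest to the user) to the bottom.
LAYERS = [
    "applications", "data", "runtime", "middleware", "operating system",
    "virtualization", "servers", "storage", "networking",
]

# How many of the top layers the customer still manages under each model
# (simplified textbook view).
CUSTOMER_MANAGED = {"IaaS": 5, "PaaS": 2, "SaaS": 0}


def customer_manages(model: str) -> list[str]:
    return LAYERS[: CUSTOMER_MANAGED[model]]


def provider_manages(model: str) -> list[str]:
    return LAYERS[CUSTOMER_MANAGED[model]:]


for model in ("IaaS", "PaaS", "SaaS"):
    print(f"{model}: customer manages {customer_manages(model) or 'nothing'}")
```

Under this view, a Salesforce CRM customer falls in the SaaS row: the provider manages every layer of the stack.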

In this scenario, the organization is using Salesforce CRM, which is a SaaS solution. Salesforce provides a complete customer relationship management (CRM) application that is accessible via a web browser, with no need for the organization to manage the underlying infrastructure or application code.

Why SaaS?

No Infrastructure Management: The customer does not need to worry about provisioning servers, databases, or networking components.

Fully Managed Application: Salesforce handles updates, patches, and maintenance, ensuring the application is always up-to-date.

Accessibility: Users can access Salesforce CRM from any device with an internet connection.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding the different cloud service models and their use cases. SaaS is particularly relevant in scenarios where organizations want to leverage pre-built applications without the complexity of managing infrastructure or development platforms.

For example, Juniper’s cloud solutions often integrate with SaaS platforms like Salesforce to provide secure connectivity and enhanced functionality. Understanding the role of SaaS in cloud architectures is essential for designing and implementing cloud-based solutions.

Which two statements are correct about the Kubernetes networking model? (Choose two.)

A. Pods are allowed to communicate if they are only in the default namespaces.

B. Pods are not allowed to communicate if they are in different namespaces.

C. Full communication between pods is allowed across nodes without requiring NAT.

D. Each pod has its own IP address in a flat, shared networking namespace.

Kubernetes networking is designed to provide seamless communication between pods, regardless of their location in the cluster. Let’s analyze each statement:

A. Pods are allowed to communicate if they are only in the default namespaces.

Incorrect: Pods can communicate with each other regardless of the namespace they belong to. Namespaces are used for logical grouping and isolation but do not restrict inter-pod communication.

B. Pods are not allowed to communicate if they are in different namespaces.

Incorrect: Pods in different namespaces can communicate with each other as long as there are no network policies restricting such communication. Namespaces do not inherently block communication.

C. Full communication between pods is allowed across nodes without requiring NAT.

Correct: Kubernetes networking is designed so that pods can communicate directly with each other across nodes without Network Address Translation (NAT). Each pod has a unique IP address, and the underlying network ensures direct communication.

D. Each pod has its own IP address in a flat, shared networking namespace.

Correct: In Kubernetes, each pod is assigned a unique IP address in a flat network space. This allows pods to communicate with each other as if they were on the same network, regardless of the node they are running on.

Why These Statements?

Flat Networking Model: Kubernetes uses a flat networking model where each pod gets its own IP address, simplifying communication and eliminating the need for NAT.

Cross-Node Communication: The design ensures that pods can communicate seamlessly across nodes, enabling scalable and distributed applications.
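A common way to get unique, NAT-free pod addresses is to carve one subnet per node out of a single cluster-wide pod CIDR, similar in spirit to kube-controller-manager's --cluster-cidr and --node-cidr-mask-size settings. The Python sketch below is a simplified model, not any plugin's actual allocator: 10.244.0.0/16 is an assumed example range (often used with Flannel), the node names are hypothetical, and reserving each node's first usable address for a bridge is an assumption.

```python
import ipaddress


def allocate_node_cidrs(cluster_cidr: str, nodes: list[str], node_prefix: int = 24) -> dict:
    """Carve one pod subnet per node out of the cluster-wide pod CIDR."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    return {node: next(subnets) for node in nodes}


def assign_pod_ips(node_cidrs: dict, pods_per_node: int) -> dict:
    """Give every pod its own address from its node's subnet."""
    pod_ips = {}
    for node, cidr in node_cidrs.items():
        hosts = cidr.hosts()
        next(hosts)  # assume the first usable address is reserved for the node's bridge
        for i in range(pods_per_node):
            pod_ips[f"{node}/pod{i}"] = next(hosts)
    return pod_ips


cluster = ipaddress.ip_network("10.244.0.0/16")
node_cidrs = allocate_node_cidrs(str(cluster), ["node-a", "node-b"])
pods = assign_pod_ips(node_cidrs, pods_per_node=3)

for name, ip in pods.items():
    print(name, ip)
```

Every pod address is unique and falls inside one routable cluster range, so a pod on node-a can reach a pod on node-b at its real IP without any address translation.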

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes Kubernetes networking concepts, including pod-to-pod communication and the flat networking model. Understanding these principles is essential for designing and managing Kubernetes clusters.

For example, Juniper Contrail provides advanced networking features for Kubernetes, ensuring efficient and secure pod communication across nodes.

Which two statements describe a multitenant cloud? (Choose two.)

A. Tenants are aware of other tenants using their shared resources.

B. Servers, network, and storage are separated per tenant.

C. The entities of each tenant are isolated from one another.

D. Multiple customers of a cloud vendor have access to their own dedicated hardware.

A multitenant cloud is a cloud architecture where multiple customers (tenants) share the same physical infrastructure or platform while maintaining logical isolation. Let’s analyze each statement:

A. Tenants are aware of other tenants using their shared resources.

Incorrect: In a multitenant cloud, tenants are logically isolated from one another. While they may share underlying physical resources (e.g., servers, storage), they are unaware of other tenants and cannot access their data or applications. This isolation ensures security and privacy.

B. Servers, network, and storage are separated per tenant.

Incorrect: In a multitenant cloud, resources such as servers, network, and storage are shared among tenants. The separation is logical, not physical. For example, virtualization technologies like hypervisors and software-defined networking (SDN) are used to create isolated environments for each tenant.

C. The entities of each tenant are isolated from one another.

Correct: Logical isolation is a fundamental characteristic of multitenancy. Each tenant’s data, applications, and configurations are isolated to prevent unauthorized access or interference. Technologies like virtual private clouds (VPCs) and network segmentation ensure this isolation.

D. Multiple customers of a cloud vendor have access to their own dedicated hardware.

Correct: While multitenancy typically involves shared resources, some cloud vendors offer dedicated hardware options for customers with strict compliance or performance requirements. For example, AWS offers "Dedicated Instances" or "Dedicated Hosts," which provide dedicated physical servers for specific tenants within a multitenant environment.
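The logical isolation described in statement C can be illustrated with per-tenant forwarding tables on shared infrastructure. The Python sketch below is hypothetical (SharedForwarder is not a Contrail or OpenStack API): a single shared object serves every tenant, much like a host running one virtual router, but each lookup is confined to the caller's own table, so two tenants can even reuse the same IP address without seeing each other.

```python
class SharedForwarder:
    """Hypothetical shared forwarding plane: one object serves all tenants,
    but each tenant gets its own table, as with a per-tenant VRF."""

    def __init__(self) -> None:
        self._tables: dict[str, dict[str, str]] = {}

    def attach(self, tenant: str, ip: str, endpoint: str) -> None:
        self._tables.setdefault(tenant, {})[ip] = endpoint

    def lookup(self, tenant: str, dst_ip: str):
        # Only the sender's own table is consulted, so other tenants are invisible.
        return self._tables.get(tenant, {}).get(dst_ip)


fwd = SharedForwarder()
fwd.attach("red", "10.0.0.5", "red-vm1")
fwd.attach("blue", "10.0.0.5", "blue-vm1")  # same IP, different tenant
fwd.attach("blue", "10.0.0.9", "blue-vm2")

print(fwd.lookup("red", "10.0.0.5"))   # red-vm1
print(fwd.lookup("blue", "10.0.0.5"))  # blue-vm1
print(fwd.lookup("red", "10.0.0.9"))   # None: red cannot reach blue's VM
```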

JNCIA Cloud References:

The Juniper Networks Certified Associate - Cloud (JNCIA-Cloud) curriculum discusses multitenancy as a key feature of cloud computing. Multitenancy enables efficient resource utilization and cost savings by allowing multiple tenants to share infrastructure while maintaining isolation.

For example, Juniper Contrail supports multitenancy by providing features like VPCs, network overlays, and tenant isolation. These capabilities ensure that each tenant has a secure and independent environment within a shared infrastructure.

TESTED 24 Feb 2026

Copyright © 2014-2026 CertsBoard. All Rights Reserved